- A shipping manifest has detailed what looks like a professional workstation card

- It could possibly be the successor to Nvidia's RTX 6000 Ada, the most expensive graphics card in the world

- Based on the RTX 5090, it is expected to have a whopping 96GB of memory, twice that of its predecessor

The GeForce RTX 5090, the latest flagship graphics card for gamers and creatives in Nvidia's GeForce 50 series, was unveiled at CES 2025 and has just gone on sale. Shortly before it did, rumors began to swirl of an RTX 5090 Ti model featuring a fully enabled GB202-200-A1 GPU and dual 12V-2×6 power connectors, theoretically allowing for up to 1,200 watts of power.

This speculation began following the appearance of a prototype image on the Chinese industry forum Chiphell. Reporting on the image, ComputerBase said, “With 24,576 shaders, the GB202-200-A1 GPU is said to offer 192 active streaming multiprocessors, which were previously rumored to be the full expansion of the GB202 chip. The memory is said to continue to offer 32GB capacity, but with 32Gbps instead of 28Gbps, it will exceed the 2TB/s mark.”
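The 2TB/s figure in that quote can be checked with some quick arithmetic. The sketch below assumes the rumored card keeps the 512-bit memory interface of the RTX 5090, and the function name is purely illustrative: peak bandwidth is the bus width in bits multiplied by the per-pin data rate, divided by eight to convert bits to bytes.

```python
def memory_bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s for a given bus width and per-pin data rate."""
    # Every pin on the bus moves data_rate_gbps gigabits per second;
    # dividing by 8 converts gigabits to gigabytes.
    return bus_width_bits * data_rate_gbps / 8


# Assumption: the rumored card keeps the RTX 5090's 512-bit interface.
BUS_WIDTH_BITS = 512

current = memory_bandwidth_gb_per_s(BUS_WIDTH_BITS, 28)  # 28Gbps GDDR7
rumored = memory_bandwidth_gb_per_s(BUS_WIDTH_BITS, 32)  # 32Gbps GDDR7

print(f"28Gbps: {current:.0f} GB/s")
print(f"32Gbps: {rumored:.0f} GB/s")
```

Under that assumption, 28Gbps memory works out to 1,792GB/s and 32Gbps memory to 2,048GB/s, which is just over the 2TB/s mark.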

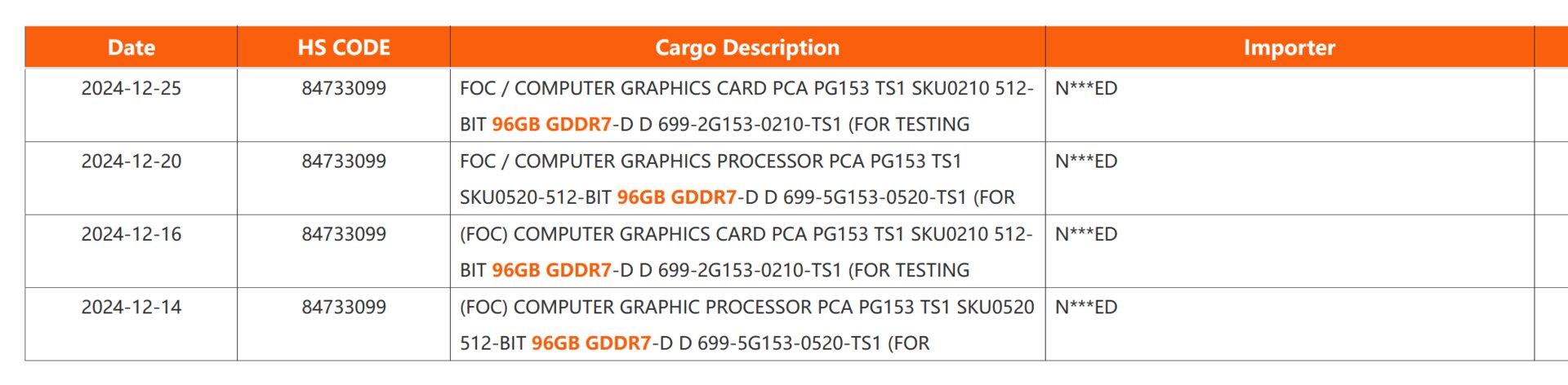

Shortly after the engineering card surfaced online, ComputerBase also spotted shipping documents on NBD Data listing a graphics card with 96GB of GDDR7 memory, marked as “for testing.” It is a reasonable assumption that this unidentified model is actually a professional workstation card, potentially – let’s say probably – the RTX 6000 Blackwell.

Useful for AI applications

The GeForce RTX 5090 features 32GB of GDDR7, using sixteen 2GB modules connected through a 512-bit memory interface. 48GB would be possible if sixteen 3GB chips were used instead of 2GB chips.

If two of these 3GB chips were connected to each 32-bit controller, placing 16 chips on each side of the board in a "clamshell" configuration for 32 chips in total, the 96GB mentioned in the documents – which is twice as much as the RTX 6000 Ada, the most expensive graphics card in the world – would become a reality.

The shipping records indicate these GPUs use a 512-bit memory bus, reinforcing this theory. The internal PCB designation PG153, seen in the documents, aligns with known Nvidia Blackwell designs and has not yet appeared in any existing consumer graphics cards.
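The capacity math behind the 32GB, 48GB and 96GB figures is easy to reproduce. This is a minimal sketch rather than anything from Nvidia: the helper name and its parameters are hypothetical, and it assumes one 32-bit controller per 32 bits of bus width, as described above.

```python
def capacity_gb(bus_width_bits: int, chip_gb: int,
                chips_per_controller: int = 1, controller_bits: int = 32) -> int:
    """Total memory in GB for a given bus width, chip density and chip layout."""
    # A 512-bit bus split into 32-bit controllers gives 16 controllers.
    controllers = bus_width_bits // controller_bits
    return controllers * chips_per_controller * chip_gb


rtx_5090 = capacity_gb(512, 2)                           # sixteen 2GB chips
with_3gb = capacity_gb(512, 3)                           # sixteen 3GB chips
clamshell = capacity_gb(512, 3, chips_per_controller=2)  # thirty-two 3GB chips

print(rtx_5090, with_3gb, clamshell)
```

The three results are 32, 48 and 96, matching the RTX 5090, the hypothetical 3GB-chip variant and the clamshell layout that would explain the 96GB listing.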

Nvidia is expected to introduce the RTX Blackwell series for workstations at its annual GPU Technology Conference (GTC 2025), so we should know more about them come March 2025. And yes, if you’re thinking 96GB of GDDR7 memory is overkill for gaming or creative purposes, I’d agree with you. It is a good amount for AI tasks, though, so we can expect to see Nvidia announce an AI version of the RTX 6000 Blackwell when it finally takes the wraps off its next-gen product.